Should I Build an AI Agent with Copilot?

Question: Microsoft's agent-building options in the M365 Copilot App and Copilot Studio paint a picture of agent-building nirvana, but does M365 Copilot deliver the full potential of AI automation?

Short answer: No.

Automating the mundane is one of the most powerful promises of AI. As Copilot becomes a standard part of the Microsoft ecosystem, a question I increasingly hear is:

“Can I build a Copilot agent to do that?”

Raising a suspicious eyebrow and speaking in a long drawl, I usually answer:

“Mayyyyybe. Let’s talk it through.”

What Is a Copilot Agent?

There’s no single definition of “Copilot.” It’s a marketing umbrella applied to various AI-powered chat experiences embedded across Microsoft products. Depending on licensing, users may have access to Copilots in GitHub, Dynamics, Windows, or even through free offerings like the Copilot Chat app and MSN search.

Some of the apps and services branded with “Copilot”

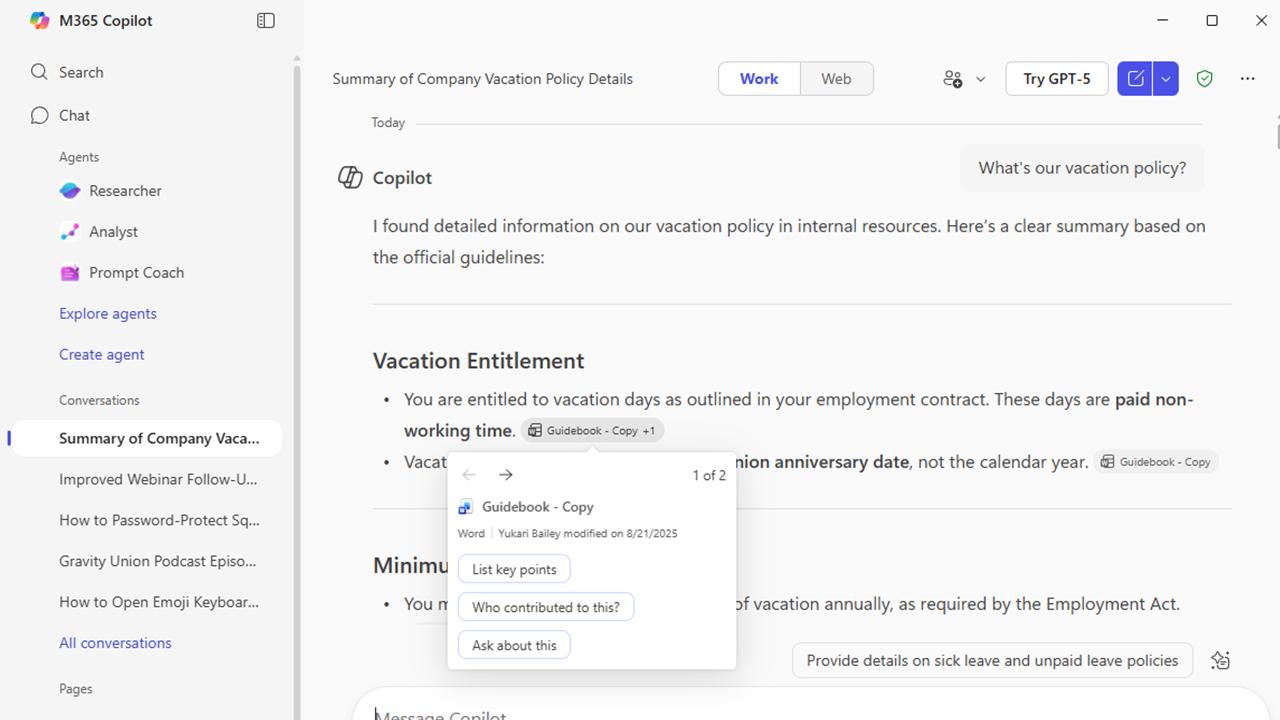

The most relevant offering for enterprise use is Microsoft 365 Copilot, a paid add-on to Microsoft 365 that integrates AI capabilities across tools like Word, PowerPoint, Excel, Outlook, and Teams. It also provides access to two places where users can build their own agents:

The M365 Copilot App

Copilot Studio

Microsoft 365 Copilot App and Copilot Studio: two places where you can create “agents”

Naturally, organizations want to maximize the value of this investment—especially given how easy Copilot makes building simple agents. But whether you should build with it depends on understanding some important design decisions Microsoft made under the hood.

The Hidden Architecture Behind M365 Copilot Agents

After working on several agent implementations, I’ve come to think of Copilot not as a blank canvas for custom agents, but as a curated experience tightly shaped by Microsoft’s Copilot architecture.

This structure limits what you can control and, more importantly, what you can rely on. Many of these “constraints” are actually thoughtful design decisions from Microsoft that help Copilot scale to address a variety of enterprise use cases, while also operating efficiently—improving response time and reducing AI-related processing costs.

Agent builders should know about the following design decisions, each of which constrains what is possible with Copilot agents:

Microsoft Chooses the AI Model, Not You

Behind every Copilot experience is a model—or more accurately, a routing system that selects a model. As explained in Microsoft’s Copilot Transparency Note:

“Microsoft 365 Copilot uses a combination of models provided by Azure OpenAI Service... to match the specific needs of each feature—such as speed or creativity.”

This design choice is efficient and scalable: Microsoft can send straightforward tasks to lighter-weight models, preserving computing resources, while routing more complex tasks to more advanced models like GPT-4 or GPT-4o.

However, from a builder’s perspective, this lack of model control has major consequences:

You cannot specify which model handles your prompt.

You cannot guarantee the same model is used day to day.

Microsoft may update or swap models at any time, without notice.

Model selection is foundational in AI agent design. When you cannot pin or tune the model, you cannot ensure consistent quality over time, and subtle differences in prompting can have more impact than they should.
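To make the routing idea concrete, here is a minimal sketch of a router that sends short, simple prompts to a lighter model and longer or more analytical prompts to a larger one. The model names, the word-count threshold, and the keyword list are all hypothetical. Microsoft does not publish its routing logic, and this is not a reconstruction of it.

```python
from dataclasses import dataclass

# Hypothetical model tiers; the names are placeholders, not real deployments.
LIGHT_MODEL = "light-model"
ADVANCED_MODEL = "advanced-model"

# Hypothetical signals that a prompt needs deeper reasoning.
COMPLEX_KEYWORDS = {"compare", "analyze", "timeline", "reconcile"}


@dataclass
class RoutingDecision:
    model: str
    reason: str


def route_prompt(prompt: str, max_light_words: int = 40) -> RoutingDecision:
    """Pick a model tier from simple surface features of the prompt."""
    words = prompt.lower().split()
    if len(words) > max_light_words:
        return RoutingDecision(ADVANCED_MODEL, "long prompt")
    # Strip trailing punctuation so "compare," still matches "compare".
    if any(w.strip(".,?!") in COMPLEX_KEYWORDS for w in words):
        return RoutingDecision(ADVANCED_MODEL, "complex keyword")
    return RoutingDecision(LIGHT_MODEL, "short, simple prompt")


if __name__ == "__main__":
    print(route_prompt("Summarize this email."))
    print(route_prompt("Compare the Q1 and Q2 budgets."))
```

In a custom-built agent, the builder owns a function like this and can pin or replace it. In Copilot, the equivalent decision belongs to the platform, so two near-identical prompts may be handled by different models.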

Personalization Takes Priority Over Accuracy

Another critical design choice: Microsoft prioritizes personalized results, which are not always the most accurate ones. As the company explains in its Transparency Note:

“In many AI systems, performance is defined in relation to accuracy... With Copilot, we're focused on Copilot as an AI-powered assistant that reflects the user's preferences.”

This means Copilot is designed to adapt to the individual user’s behavior, memory, and Microsoft Graph profile—personal calendars, documents, chats, etc.—to generate tailored responses.

The challenge? Agent builders have no control over this personalization layer. You can’t:

Predict how different users will receive different answers to the same prompt

Influence how memory or past messages are used

Benchmark or evaluate agent quality in a consistent, testable way

This personalization works well for individual productivity tools. But for repeatable business workflows or agents that must deliver consistent answers across users, this can be a serious limitation.
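One way to see why this matters for testing: a basic consistency check sends the same prompt on behalf of several users and measures how often the answers agree. The sketch below is only an illustration. `fake_agent` is a hypothetical stand-in that mixes user context into its answer, and nothing here calls a real Copilot API.

```python
from collections import Counter
from typing import Callable, Dict, List


def agreement_rate(answers: List[str]) -> float:
    """Share of answers that match the most common answer (1.0 = all identical)."""
    if not answers:
        raise ValueError("need at least one answer")
    normalized = [a.strip().lower() for a in answers]
    most_common_count = Counter(normalized).most_common(1)[0][1]
    return most_common_count / len(normalized)


def run_consistency_check(
    agent: Callable[[str, Dict[str, str]], str],
    prompt: str,
    user_contexts: List[Dict[str, str]],
) -> float:
    """Ask the same prompt once per user context and score the agreement."""
    answers = [agent(prompt, ctx) for ctx in user_contexts]
    return agreement_rate(answers)


def fake_agent(prompt: str, ctx: Dict[str, str]) -> str:
    # Hypothetical stand-in: the answer depends on the user's department,
    # mimicking a personalization layer the builder cannot switch off.
    return f"Policy for {ctx['department']}: see the handbook."


if __name__ == "__main__":
    users = [{"department": "HR"}, {"department": "HR"}, {"department": "Sales"}]
    print(run_consistency_check(fake_agent, "What is the travel policy?", users))
```

When the agreement rate falls well below 1.0 for a question that should have a single answer, the variation is coming from somewhere other than the prompt, which is exactly what is hard to isolate when the personalization layer is out of the builder's hands.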

Grounding Content Is Simple on the Surface, but Opaque in Practice

One of the most appealing aspects of M365 Copilot is its native integration with enterprise content: Word docs, SharePoint files, emails, and Teams chats. It appears to “just work.”

However, what seems simple masks significant complexity.

To ground an LLM’s response in your content, Microsoft does several things behind the scenes:

Converts documents into formats optimized for semantic search

Extracts metadata and context to inform search and ranking

Prepares data using proprietary indexing and vectorization logic

In typical AI agent development, this data prep is a key stage. Builders make intentional choices about how to clean, structure, index, and rank content—decisions that directly affect output quality.

With Copilot, all of this is abstracted. You don’t control how:

Documents are parsed or tokenized

Content is embedded into vectors

Semantic relevance is calculated

Further, Microsoft imposes technical limits:

Document size restrictions

Supported file types

Limitations on how many files can be indexed

These constraints often mean Copilot agents will miss relevant content because it was filtered out, misinterpreted, or simply not indexed.
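For a sense of what the data prep choices look like when they are in the builder's hands, here is a deliberately simplified sketch of chunking, embedding, and relevance ranking. It assumes a toy bag-of-words "embedding" in place of a real embedding model, and the function names and chunk size are illustrative. It is not a description of Microsoft's proprietary indexing.

```python
import math
import re
from collections import Counter
from typing import List


def chunk_text(text: str, max_words: int = 50) -> List[str]:
    """Split text into fixed-size word windows (one of many chunking choices)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector standing in for a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_chunks(query: str, chunks: List[str]) -> List[str]:
    """Order chunks from most to least similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine_similarity(q, embed(c)), reverse=True)


if __name__ == "__main__":
    chunks = ["expense report rules", "vacation policy for staff"]
    print(rank_chunks("vacation policy", chunks))
```

Each function is a decision point: chunk size, tokenization, the embedding model, and the similarity measure all change which content surfaces. In Copilot, those decisions are made for you.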

RAG, and Why It Matters

When Copilot retrieves content to answer a prompt, it uses a method called Retrieval-Augmented Generation (RAG). This technique sends a package of relevant text snippets to the model as context, which the model uses to generate its answer.

Importantly:

The LLM is not trained on your internal content.

Instead, RAG provides just-in-time knowledge injection based on prompt analysis and search.

RAG design is one of the most powerful levers in agent quality. In a custom-built system, you can:

Choose search techniques (semantic, keyword, hybrid)

Select how much content to include

Apply logic to prioritize which sources are most trustworthy

Chain or parallelize prompts with specialized routing logic

With M365 Copilot, all of this is hidden and locked down.

Most notably, Microsoft limits the volume of content sent to the LLM—typically around 10 to 20 documents per prompt. This cap makes Copilot poorly suited to questions that require:

Large-scale summarization

Deep cross-referencing across content sets

Broad situational analysis or timelines

You may see this limitation surface when, for example, you ask Copilot to find all emails you missed after a vacation and it returns just a few.
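The RAG levers described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: it uses plain keyword overlap rather than semantic or hybrid search, the `SOURCE_TRUST` weights and the `Doc` type are hypothetical, and the `top_k` parameter plays the role of the cap on how much content reaches the model.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Doc:
    source: str
    text: str


# Hypothetical trust weights per source type; unknown sources default to 0.5.
SOURCE_TRUST: Dict[str, float] = {"policy": 1.0, "wiki": 0.7, "chat": 0.4}


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms that appear in the text."""
    q_terms = set(query.lower().split())
    t_terms = set(text.lower().split())
    return len(q_terms & t_terms) / len(q_terms) if q_terms else 0.0


def retrieve(query: str, docs: List[Doc], top_k: int = 3) -> List[Doc]:
    """Score docs by keyword overlap times source trust and keep the top_k."""
    scored = [
        (keyword_score(query, d.text) * SOURCE_TRUST.get(d.source, 0.5), d)
        for d in docs
    ]
    relevant = [(s, d) for s, d in scored if s > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in relevant[:top_k]]


def build_prompt(query: str, context: List[Doc]) -> str:
    """Assemble the retrieved snippets and the question into one prompt string."""
    snippets = "\n".join(f"[{d.source}] {d.text}" for d in context)
    return f"Answer using only this context:\n{snippets}\n\nQuestion: {query}"


if __name__ == "__main__":
    emails = [Doc("chat", f"travel note {i}") for i in range(30)]
    kept = retrieve("travel", emails, top_k=10)
    print(f"{len(kept)} of {len(emails)} matching items reach the model")
```

The final lines show the effect of a cap: all 30 items match the query, but only `top_k` of them are passed along. In a custom system you can raise that number, page through results, or summarize in batches. With a fixed cap, the model never sees the rest.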

No Access to Model Tuning Parameters

Another constraint is the lack of model configuration settings.

In tools like Azure OpenAI Studio or AI Foundry, you can adjust model behaviors using parameters such as:

Temperature (controls creativity vs. predictability)

Top-p / Top-k sampling (affects randomness and diversity)

System prompts or instructions (guides tone and format)

These options let you fine-tune how the agent responds—whether you want it to summarize conservatively, rephrase content in a particular style, or apply structured output formatting.

With Copilot, these parameters are preset and cannot be changed. For agent builders who care about consistency, control, or domain-specific tone, this is a major limitation.
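To show what temperature and top-p actually do, here is a small, self-contained sketch of both applied to a toy next-token distribution. The token scores are made up for illustration, and real model serving stacks implement these steps internally. The function names here are not from any particular SDK.

```python
import math
import random
from typing import Dict, Optional


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Keep the smallest set of most likely tokens whose mass reaches top_p, then renormalize."""
    kept: Dict[str, float] = {}
    cumulative = 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


def sample_token(
    logits: Dict[str, float],
    temperature: float = 1.0,
    top_p: float = 1.0,
    rng: Optional[random.Random] = None,
) -> str:
    """Sample one token after applying temperature scaling and top-p filtering."""
    rng = rng or random.Random()
    probs = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    toy_logits = {"the": 2.0, "a": 1.0, "zebra": 0.0}  # made-up scores
    print(softmax_with_temperature(toy_logits, 0.5))
    print(softmax_with_temperature(toy_logits, 2.0))
```

A low temperature concentrates probability on the most likely token, which favors predictable output. A high temperature flattens the distribution, and top-p trims the unlikely tail before sampling. These are the dials that a builder can turn in a custom deployment.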

Considerations and Options

To recap, M365 Copilot is designed to be accessible and easy to use. But it comes with serious tradeoffs for anyone looking to build high-performing agents:

Model selection and updates are controlled by Microsoft

Personalization introduces variability across users

Grounding content is opaque and difficult to optimize

RAG processes are fixed and limited in scope

No access to model settings limits tuning and iteration

This doesn’t mean Copilot is useless—it simply means you need to match your use case to the tool.

When Copilot Might Be the Right Fit

Stick to simple scenarios with clear boundaries and limited complexity, such as:

Summarizing a single document or small document set

Performing light data extraction from emails or SharePoint

Answering straightforward questions using indexed M365 content

Editing, repurposing, or rewriting text within familiar workflows

Guiding users through short, structured workflows (e.g., form completion, checklist generation)

If your agent use case requires nuance, multi-document reasoning, deep contextual understanding, or high reliability across users, Copilot is likely not the best choice.

Better Options for Complex Agent Scenarios

For richer agent-building capabilities, Microsoft provides alternatives like AI Foundry—a toolkit that allows builders to:

Swap between available AI models to test response quality

Access fine-grained RAG controls

Customize data ingestion and content preparation

Tune models using open parameters

Integrate hybrid workflows and orchestration logic

The Azure AI Foundry start page with AI models to explore. You can find it here: Azure AI Foundry

Other frameworks from AWS, Google, and open-source ecosystems also provide high levels of customization and control.

If you’re investing in agents that play a meaningful role in business operations, these tools are better suited to the job.

Not Sure Where to Start? We Can Help.

If you're new to AI agent development or still exploring what Copilot and other platforms can do, you're not alone. This space is evolving quickly, and the right starting point isn't always obvious.

At Gravity Union, we help organizations navigate this complexity. Whether you're experimenting with your first use case or ready to design a broader AI architecture, we can support you in:

Identifying use cases that align with your goals and existing systems

Evaluating tools and platforms for fit, including Copilot, AI Foundry, and others

Prototyping and testing agents before you scale

Building, deploying, and refining an agent from start to finish

Our goal is to help you move from idea to implementation with clarity and confidence—whether you're solving a one-off problem or laying the foundation for long-term AI adoption.